Monkey Around With ML and Library Data -- Semantic Search (Part 2)

Semantic Search For Newsdex

This is a continuation of Part One of the “Monkey Around With ML and Library Data” series of posts. See Part One if you want to start from the beginning.

What is Newsdex?

Newsdex is an index of notable local stories, death notices, and obituaries, created and maintained by Library staff. Use it to find the dates and pages on which a story appeared in one of our local newspapers. Entries for events covered in newspapers earlier than 1900 are being added retrospectively, so the index now includes partial coverage of articles as far back as the early 1800s.

Technically, it’s an SQLite database that has been converted from MARC record data.

Thanks to Datasette, it’s possible to explore the structure of this database here: newsdex.chpl.org/Newsdex+Record+Data

If you’d like to explore this dataset for your own ML project, it’s possible to download all 1.8 million records from the project’s GitHub page: github.com/cincinnatilibrary/newsdex

What is Semantic Search?

In a nutshell, semantic search is “search with meaning”.

www.nowpublishers.com/article/Details/INR-032

Semantic search differs significantly from traditional keyword search in that it can match documents that are semantically similar to the query. Keyword search, on the other hand, relies on literal lexical matching of words. While keyword search remains very important for matching proper names, places, entities, etc., semantic search offers the ability to link documents by their contextual meaning.

For example, consider these newspaper headlines (they’re not from Newsdex in case you were wondering):

```python
[
    "Advancements in Space Exploration in 2021",
    "2021: A Year of Space Exploration",
    "NASA's Breakthroughs in Martian Terrain Analysis",
    "New Horizons Probe Reveals Secrets of Pluto",
]
```

Suppose a user performs a keyword search with the query:

```
"Space exploration advancements in 2021"
```

Results may include the first two titles:

- “Advancements in Space Exploration in 2021”

- “2021: A Year of Space Exploration”

The last two do not contain any of the literal search terms in the query, so they may be missed entirely even though they may still offer significant value to the user.
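To make the keyword case concrete, here is a minimal sketch of literal term matching in Python. It is a simplification, assuming a document matches when it shares at least one non-stop-word term with the query; the `STOP_WORDS` set and the function names are hypothetical, and real keyword engines add stemming, phrase handling, and relevance ranking.

```python
import re

# Hypothetical, tiny stop-word list; real search engines use much larger ones.
STOP_WORDS = {"a", "an", "in", "of", "the"}


def terms(text: str) -> set[str]:
    """Lowercase the text, split it into word tokens, and drop stop words."""
    tokens = re.findall(r"[a-z0-9']+", text.lower())
    return {t for t in tokens if t not in STOP_WORDS}


def keyword_search(query: str, documents: list[str]) -> list[str]:
    """Return the documents that share at least one literal term with the query."""
    query_terms = terms(query)
    return [doc for doc in documents if query_terms & terms(doc)]


headlines = [
    "Advancements in Space Exploration in 2021",
    "2021: A Year of Space Exploration",
    "NASA's Breakthroughs in Martian Terrain Analysis",
    "New Horizons Probe Reveals Secrets of Pluto",
]

for hit in keyword_search("Space exploration advancements in 2021", headlines):
    print(hit)
```

Running it prints only the first two headlines. The NASA and New Horizons headlines share no terms with the query once "in" is treated as a stop word, so literal matching never surfaces them.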

With semantic search, all four of these articles would likely be returned to the user because they are all semantically similar to the query. The fact that they don't all share the same keywords doesn't matter with this approach.

How is Semantic Search Performed?

Semantic search is made possible by AI models that generate output representing things humans recognize easily: the intent, context, and meaning of something such as a newspaper headline. This output takes the form of what are known as “embeddings” or “vector embeddings” (see Monkey Around With ML and Library Data, Part 1, for a brief review).

Embeddings are essentially a mathematical representation of the input that captures its semantic meaning for the AI model. Embeddings can be compared to one another to measure how similar or dissimilar they are. To perform a query, an embedding must first be created for the query itself. That embedding is then compared to the document embeddings, which are often stored in what are known as “vector databases”, systems that provide indexing and efficient search for exactly this purpose.
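One common way to measure how similar two embeddings are is cosine similarity, which compares the direction of two vectors rather than their length. It is an assumption here that this is the measure used for Newsdex; Qdrant, for instance, lets a collection use cosine, dot-product, or Euclidean distance. For two n-dimensional embeddings a and b, followed by a tiny worked example with made-up 2-D vectors:

$$
\cos(\mathbf{a}, \mathbf{b}) = \frac{\mathbf{a} \cdot \mathbf{b}}{\lVert \mathbf{a} \rVert \, \lVert \mathbf{b} \rVert} = \frac{\sum_{i=1}^{n} a_i b_i}{\sqrt{\sum_{i=1}^{n} a_i^2}\,\sqrt{\sum_{i=1}^{n} b_i^2}}
$$

$$
\mathbf{a} = (1, 0),\ \mathbf{b} = (1, 1):\quad \cos(\mathbf{a}, \mathbf{b}) = \frac{1 \cdot 1 + 0 \cdot 1}{\sqrt{1^2 + 0^2}\,\sqrt{1^2 + 1^2}} = \frac{1}{\sqrt{2}} \approx 0.707
$$

A score near 1 means the vectors point in nearly the same direction (similar meaning), while a score near 0 means they are unrelated.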

Semantic Search Basic Workflow:

- All documents in the dataset (newspaper headlines in this case) are transformed into embeddings using an AI model.

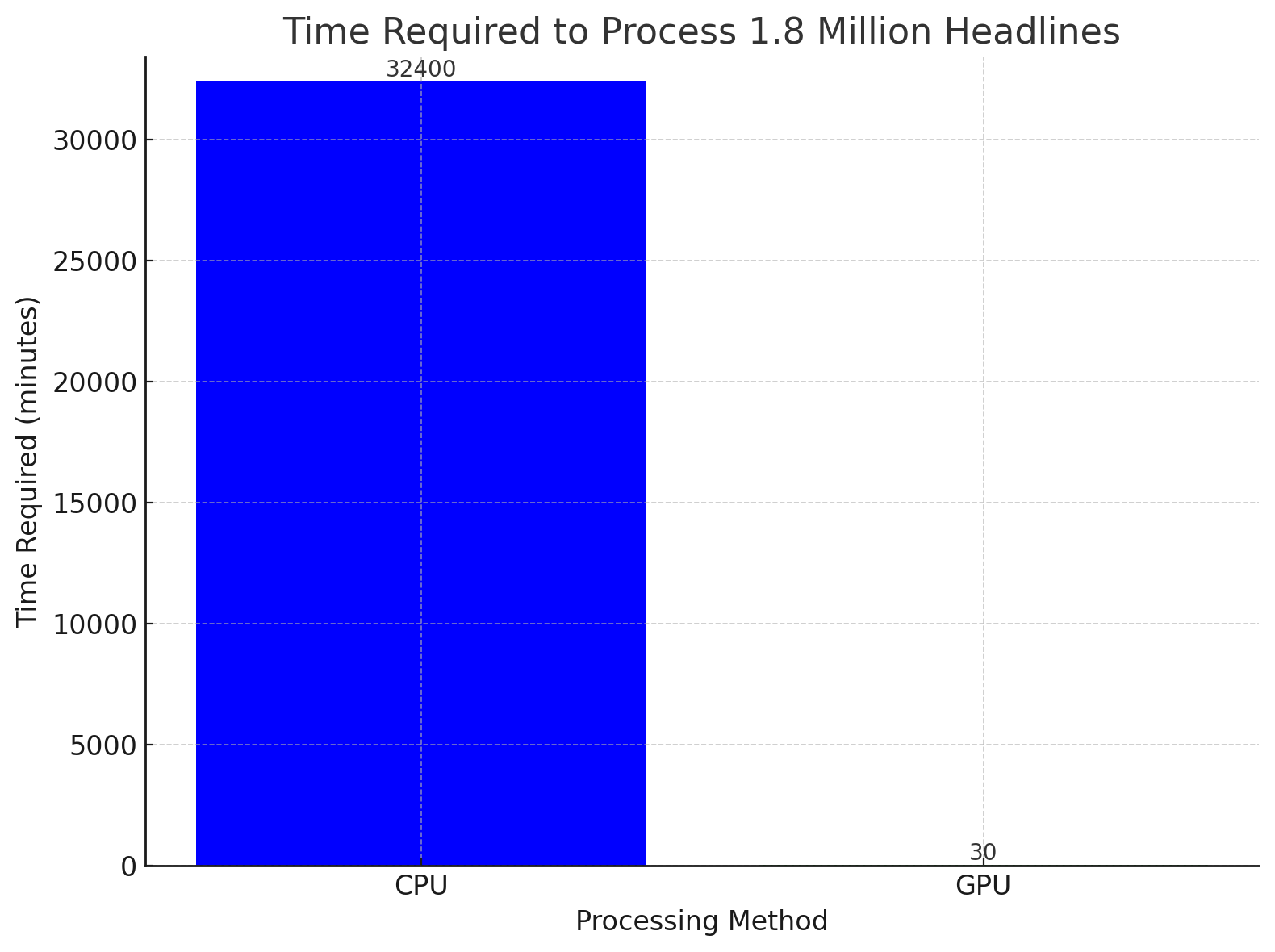

Note: Creating these embeddings is a resource-intensive processing task. While it can be done on general-purpose CPUs, for larger datasets such as Newsdex the process isn't practical without one or more GPUs. GPUs are preferred for machine learning tasks primarily for their parallel processing capabilities: CPUs can handle multiple tasks at once thanks to their multi-core architectures, but GPUs have hundreds to thousands of cores that can efficiently perform the computations ML algorithms require. For example, creating embeddings for 10k headlines using one of the more basic AI models was estimated to take over 3 hours on an AMD Ryzen 7 CPU. Using an Nvidia Tesla T4 GPU, 1.8M headlines were transformed into embeddings in under 30 minutes. More complex and capable models, and larger datasets containing more “tokens” (roughly, words or pieces of words), only increase the resources needed.

- Embeddings are stored in a vector database. A vector database such as Qdrant (https://qdrant.tech/) indexes the vectors and provides methods for searching them quickly, for example to find the vectors with the closest semantic similarity to a query.

- When a user submits a search query, that query must first be transformed into an embedding using the same AI model that was used on the dataset in the first step.

- The query embedding is used to search the vector database, which returns the documents whose embeddings are the closest match.
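The four workflow steps can be sketched end to end in Python. This is a minimal, self-contained illustration under loud assumptions: `embed` is a hypothetical toy that averages hand-picked 3-D word vectors in place of a real AI model, and `InMemoryVectorIndex` is a hypothetical brute-force stand-in for a vector database such as Qdrant, not Qdrant's API. The soup headline is invented purely for contrast.

```python
import math
import re

# HYPOTHETICAL toy "model": hand-picked 3-D word vectors standing in for a real
# embedding model. Dimensions loosely mean (space, time, food). Not real data.
TOY_WORD_VECTORS = {
    "space": (1.0, 0.0, 0.0),
    "exploration": (0.9, 0.1, 0.0),
    "advancements": (0.5, 0.3, 0.0),
    "nasa": (0.9, 0.0, 0.0),
    "martian": (0.8, 0.0, 0.0),
    "probe": (0.7, 0.0, 0.0),
    "pluto": (0.8, 0.0, 0.0),
    "2021": (0.0, 1.0, 0.0),
    "year": (0.0, 0.8, 0.0),
    "soup": (0.0, 0.0, 1.0),
    "recipe": (0.0, 0.0, 0.9),
}


def embed(text):
    """Toy embedding: average the vectors of known words, ignoring unknown ones."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    vectors = [TOY_WORD_VECTORS[w] for w in words if w in TOY_WORD_VECTORS]
    if not vectors:
        return (0.0, 0.0, 0.0)
    return tuple(sum(dim) / len(vectors) for dim in zip(*vectors))


def cosine(a, b):
    """Cosine similarity; defined as 0.0 when either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class InMemoryVectorIndex:
    """Brute-force stand-in for a vector database (no real index structure)."""

    def __init__(self):
        self._points = []  # (document, vector) pairs

    def add(self, document, vector):
        self._points.append((document, vector))

    def search(self, query_vector, limit=3):
        """Score every stored vector and return the best (score, document) pairs."""
        scored = [(cosine(query_vector, vec), doc) for doc, vec in self._points]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:limit]


headlines = [
    "Advancements in Space Exploration in 2021",
    "2021: A Year of Space Exploration",
    "NASA's Breakthroughs in Martian Terrain Analysis",
    "New Horizons Probe Reveals Secrets of Pluto",
    "Best Soup Recipe for Winter",  # hypothetical off-topic headline for contrast
]

# Steps 1 and 2: embed every document and store its vector.
index = InMemoryVectorIndex()
for headline in headlines:
    index.add(headline, embed(headline))

# Step 3: embed the query with the SAME model used for the documents.
query_vector = embed("Space exploration advancements in 2021")

# Step 4: retrieve the closest matches.
for score, headline in index.search(query_vector, limit=5):
    print(f"{score:.3f}  {headline}")
```

With these made-up vectors, all four space headlines outrank the soup headline, which scores 0 because its vector shares no dimension with the query. A real model learns vectors with typically hundreds of dimensions from data, and the brute-force loop here checks every stored vector, which is exactly the cost a vector database's index is designed to avoid at the scale of 1.8 million records.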
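The CPU and GPU timings in the note on embedding costs can be turned into rough throughput numbers. The CPU figure was an estimate ("over 3 hours"), so the CPU rate below is an upper bound, and the GPU figure ("under 30 minutes") gives a lower bound; the two runs also covered different numbers of headlines, and the full-dataset CPU projection assumes throughput scales linearly, so treat the results as indicative only.

```python
# Figures from the note: 10k headlines estimated at over 3 hours on an
# AMD Ryzen 7 CPU; 1.8M headlines in under 30 minutes on an Nvidia Tesla T4.
cpu_headlines, cpu_seconds = 10_000, 3 * 60 * 60     # took at least this long
gpu_headlines, gpu_seconds = 1_800_000, 30 * 60      # took at most this long

cpu_rate = cpu_headlines / cpu_seconds   # upper bound, headlines per second
gpu_rate = gpu_headlines / gpu_seconds   # lower bound, headlines per second
speedup = gpu_rate / cpu_rate            # the real speedup is at least this

# ASSUMPTION: CPU throughput stays constant for the full 1.8M headlines.
cpu_full_hours = gpu_headlines / cpu_rate / 3600

print(f"CPU: at most {cpu_rate:.2f} headlines/s")
print(f"GPU: at least {gpu_rate:,.0f} headlines/s")
print(f"Speedup: at least ~{speedup:,.0f}x")
print(f"All 1.8M headlines on the CPU: at least ~{cpu_full_hours:,.0f} hours")
```

That works out to under one headline per second on the CPU versus at least 1,000 per second on the GPU, a roughly thousandfold difference. At the CPU rate, all 1.8M headlines would take at least 540 hours (over three weeks), which is why the GPU route is the practical one for Newsdex.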

Conclusion

In this post, we’ve briefly explored what semantic search can offer in terms of creating a more nuanced, context-aware approach to discovering library resources. In an upcoming post, I’ll cover more of the technical aspects, including how we can use open source tools to work with our headlines and then perform some semantic searches.

The goal will be to produce a simple web interface where patrons can perform semantic searches for themselves.